As long as it is left running, the simulation can keep evolving the population so that it adapts to changing situations. The dynamic learning capability can also be turned off to produce agents with fixed, specific levels of difficulty for competing against human players.
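A minimal sketch of how learning might be switched off to obtain a fixed-difficulty opponent. It assumes that each generation's best agent is archived along with its score. The `Agent` and `Trainer` classes, the `learning_enabled` flag, and the `breed` callback are hypothetical illustrations, not the author's implementation.

```python
import copy
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    genes: list[float]   # hypothetical genome: tunable behaviour parameters
    score: float = 0.0   # fitness assigned by the simulation's scoring system


@dataclass
class Trainer:
    learning_enabled: bool = True
    archive: list[Agent] = field(default_factory=list)

    def step(self, population: list[Agent],
             breed: Callable[[list[Agent]], list[Agent]]) -> list[Agent]:
        if not self.learning_enabled:
            # Learning off: the population is returned unchanged, so behaviour stays fixed.
            return population
        # Archive a frozen copy of this generation's best performer.
        best = max(population, key=lambda a: a.score)
        self.archive.append(copy.deepcopy(best))
        # Hand the population to the (hypothetical) breeding routine.
        return breed(population)

    def opponent_at(self, target_score: float) -> Agent:
        # Fixed-difficulty opponent: the archived agent whose score is closest to the target.
        pick = min(self.archive, key=lambda a: abs(a.score - target_score))
        return copy.deepcopy(pick)
```

Picking from an archive, rather than stopping the run at the "right" moment, would let one training run supply opponents at several difficulty levels.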

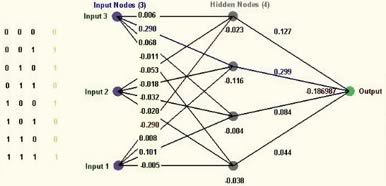

Each NN is defined internally as an object whose inputs usually correspond to sensors and whose outputs usually influence triggers, game states, or various decision-making functions (see fuzzy logic). The values of its internal "weights" are modified slightly during the offspring mutation process. As a result, a child (agent) may be a close copy of its parent(s) but with slightly different capabilities, giving it a chance to perform a little differently from the parent(s), as in nature.
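The idea of an NN as an object with sensor inputs, graded outputs, and mutable weights can be sketched as follows. This assumes a single-layer network with sigmoid outputs and Gaussian weight perturbation; the class name `AgentBrain`, the `sigma` value, and the layer layout are hypothetical choices, not the structure used in the original work.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class AgentBrain:
    # weights[j][i]: weight from sensor input i to output j (single layer, hypothetical)
    weights: list[list[float]]

    @classmethod
    def create(cls, n_inputs: int, n_outputs: int, rng: random.Random) -> "AgentBrain":
        # Start with small random weights in [-1, 1].
        return cls([[rng.uniform(-1.0, 1.0) for _ in range(n_inputs)]
                    for _ in range(n_outputs)])

    def act(self, sensors: list[float]) -> list[float]:
        # Each output is squashed to (0, 1) so it can act as a graded,
        # fuzzy-style signal rather than a hard on/off trigger.
        return [1.0 / (1.0 + math.exp(-sum(w * s for w, s in zip(row, sensors))))
                for row in self.weights]

    def child(self, rng: random.Random, sigma: float = 0.05) -> "AgentBrain":
        # The offspring copies the parent's weights, each nudged by a small
        # Gaussian perturbation, so it behaves similarly but not identically.
        return AgentBrain([[w + rng.gauss(0.0, sigma) for w in row]
                           for row in self.weights])
```

For example, the sensors might be hypothetical readings such as distance to an enemy and the agent's own health, and the two outputs an "attack" urge and a "flee" urge that feed the agent's decision triggers.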

In artificial intelligence (AI), there are several methods to choose from depending on the situation, and the brief explanation below is only the "tip of the iceberg." In the early days of AI scripting, everything was coded by hand using the "brute force" method, which resulted in many thousands of lines of complex code (an "expert system"). The methods explained below can also be added to an existing expert system, or to a completed game, to enhance the AI even further. Usually, these methods are programmed into an intelligent agent (also known as a software agent or NPC). Within a software simulation, a population of agents is set up with physical parameters (speed, turning, etc.), cognitive (decision-making) capabilities, and behaviors (triggers). A scoring system can compare their relative performance, and may include both short-term and long-term goals.
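A population of agents with physical parameters, a cognitive trait, and a score that mixes short-term and long-term goals might look like the sketch below. The specific fields, units, and the weighted-sum scoring rule are hypothetical examples; the source does not define its scoring function.

```python
from dataclasses import dataclass


@dataclass
class Agent:
    max_speed: float          # physical parameter (hypothetical units: m/s)
    turn_rate: float          # physical parameter (hypothetical units: degrees per second)
    aggression: float         # cognitive trait in [0, 1]: attack vs. defend bias
    hits_landed: int = 0      # short-term goal tally for the current round
    rounds_survived: int = 0  # long-term goal tally across rounds


def score(agent: Agent, w_short: float = 1.0, w_long: float = 5.0) -> float:
    # Hypothetical weighted sum; the weights trade off short- against long-term goals.
    return w_short * agent.hits_landed + w_long * agent.rounds_survived


def rank(population: list[Agent]) -> list[Agent]:
    # Relative performance: best-scoring agents first.
    return sorted(population, key=score, reverse=True)
```

With these default weights, an agent that survives three rounds while landing two hits (score 17) outranks one that lands ten hits but survives none (score 10); changing `w_short` and `w_long` shifts what evolution will favor.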

(See Russell & Norvig, Artificial Intelligence: A Modern Approach, Pt. 1, Ch. 2.) Most games do not include any form of adaptive learning capability because of the complexity of programming it. My focus in programming agents has been to extend traditional pre-programmed capabilities by adding adaptive learning through object-based artificial Neural Networks (NN), Fuzzy Logic, and Evolutionary Computation (EC).

EC lets the agents' capabilities adapt: at the end of each round of testing and scoring, the system saves the best-performing individuals in the population, makes offspring from these survivors, and discards the poor performers (which may already have been killed off in the simulation). When offspring are created for the next generation, they may be combined from two parent individuals (if desired), and small mutations (errors) are added to these newly created individuals (agents) using a Gaussian distribution function (see bell-curve graphic). This approximates what happens in nature when genes are copied and mutated. The mutations affect the internal variables associated with each agent's capabilities, such as rate of acceleration or aggressive-versus-defensive ratio.

Depending on whether usable results are needed quickly or highly optimized results are wanted, and on the complexity of the scoring function, the simulation can run on one computer for a relatively short time (seconds, minutes, hours, or days) or be set up on a distributed computing network and left running for weeks or months. As long as it runs, the individuals can be "trained," or compete directly against each other for survival or on some other scoring function that determines which are the "best-fit." Over many generations, the survivors become better adapted to the parameters within the simulation; in real life, the equivalent goal is to survive long enough to pass on genetic material and produce viable offspring. Two words: natural selection.
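The generational loop described above (score, keep survivors, optionally combine two parents, add Gaussian mutations) can be sketched as below. This is a minimal illustration, assuming each genome is a list of floats such as rate of acceleration and aggression ratio. Truncation selection, uniform crossover, and the `survive_frac` and `sigma` defaults are choices made for the example, not details given in the text.

```python
import random
from typing import Callable

Genome = list[float]  # e.g. [rate_of_acceleration, aggression_ratio, ...] (hypothetical)


def crossover(a: Genome, b: Genome, rng: random.Random) -> Genome:
    # Uniform crossover: each gene is taken from one parent or the other at random.
    return [x if rng.random() < 0.5 else y for x, y in zip(a, b)]


def mutate(g: Genome, rng: random.Random, sigma: float) -> Genome:
    # Gaussian ("bell-curve") mutation: most changes are tiny, a few are larger.
    return [x + rng.gauss(0.0, sigma) for x in g]


def next_generation(pop: list[Genome], fitness: Callable[[Genome], float],
                    rng: random.Random, survive_frac: float = 0.5,
                    sigma: float = 0.1, use_crossover: bool = True) -> list[Genome]:
    # Rank by fitness and keep the top fraction; poor performers are discarded.
    ranked = sorted(pop, key=fitness, reverse=True)
    survivors = ranked[:max(2, int(len(pop) * survive_frac))]
    children: list[Genome] = []
    # Refill the population with mutated offspring of randomly chosen survivors.
    while len(survivors) + len(children) < len(pop):
        p1, p2 = rng.sample(survivors, 2)
        base = crossover(p1, p2, rng) if use_crossover else list(p1)
        children.append(mutate(base, rng, sigma))
    return survivors + children


def evolve(pop: list[Genome], fitness: Callable[[Genome], float],
           generations: int, seed: int = 0) -> Genome:
    rng = random.Random(seed)
    for _ in range(generations):
        pop = next_generation(pop, fitness, rng)
    return max(pop, key=fitness)
```

Because survivors are carried over unchanged, the best fitness in the population never decreases from one generation to the next; the assumed population must contain at least two genomes so that two parents can be sampled.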